The platform technology wave announced in 2012 undoubtedly brings a number of innovations to .NET and the Microsoft platform as a whole. One of my personal favorites is the piece of the platform that makes push scenarios easy to adopt. With cloud patterns on the rise, more and more business and technical scenarios require a server or service to push data to the client. Some of the technologies in this space are WebSockets and SignalR. My intention in this post is not to explain why “push” is needed. Assuming that is clear, I would like to provide an interesting native example which shows how push scenarios can be achieved with plain HTTP.

To demonstrate this I decided to avoid technologies like WebApi and to implement the example on native ASP.NET. This is nothing against WebApi, which I really like. If you like, you can implement the same scenario on top of WebApi or WCF. However, WebApi and WCF are strongly message driven by design. My scenario is natively stream driven, so plain ASP.NET fits a bit better in this case.

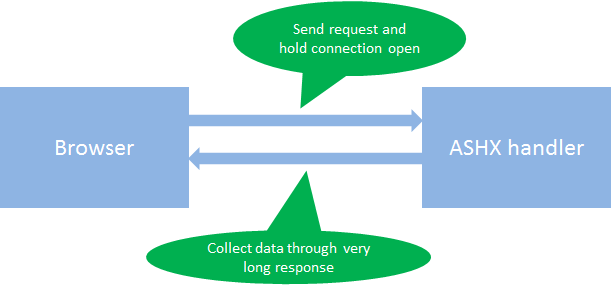

Basically I want to send a request from some HTTP-capable client (e.g. a browser) to an ASP.NET handler which fulfills the following:

1. The request cannot be completed synchronously.

This means we will process the request asynchronously.

2. The data sent to the client is one huge stream.

This holds the connection between client and server open. We will push a stream of data through this connection from the server to the client.

Interestingly, both WebSockets and SignalR build on these two requirements. WebSockets goes the hard way and extends the HTTP protocol to make this work. SignalR uses standard HTTP and makes use of 4 transports (ServerSentEvents, ForeverFrame, LongPolling and WebSockets).

My idea in this post is to show how to implement a similar scenario natively by yourself on top of ASP.NET. I want to open the browser, type in the URL and continually receive data from the server for a very long time without getting a time-out. The following picture illustrates this scenario:

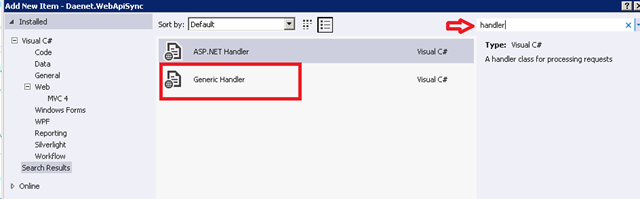

First I will create a web project of any type (Forms, MVC, Empty). Then add a new project item and select Generic Handler:

This will create an empty ASHX handler:

    /// <summary>
    /// Summary description for Handler1
    /// </summary>
    public class MyStreamHandler : IHttpHandler
    {
        public void ProcessRequest(HttpContext context)
        {
            context.Response.ContentType = "text/plain";
            context.Response.Write("Hello World");
        }

        public bool IsReusable
        {
            get
            {
                return false;
            }
        }
    }

This is a typical ASHX handler, which you probably already know. Now change the implementation as follows:

    public class MyStreamHandler : HttpTaskAsyncHandler
    {
        public override async Task ProcessRequestAsync(HttpContext context)
        {
        }
    }

We basically replace the IHttpHandler implementation with HttpTaskAsyncHandler, which is the async version of the handler. Now I will implement some code which periodically writes data to the open response stream. In fact, every request physically causes ASP.NET to create a corresponding response stream, context.Response. That means the common request-response exchange, as we know it, is a stream by its very nature. What I did in the code below is asynchronous processing of the request:

    using System;
    using System.Threading;
    using System.Threading.Tasks;
    using System.Web;

    namespace Daenet.WebApiSync
    {
        /// <summary>
        /// Streams a sequence of messages to the client over one long-lived response.
        /// </summary>
        public class MyStreamHandler : HttpTaskAsyncHandler
        {
            public override async Task ProcessRequestAsync(HttpContext context)
            {
                // Do not abort the request when the execution timeout elapses.
                context.ThreadAbortOnTimeout = false;

                await doJobAsync(context);
            }

            private Task doJobAsync(HttpContext context)
            {
                // Produce the data on a thread-pool thread.
                return Task.Run(() =>
                {
                    for (int n = 0; n < 100; n++)
                    {
                        context.Response.Write(String.Format("Sequence: {0} <br/>", n));

                        // Push the buffered chunk to the client right away.
                        context.Response.Flush();

                        Thread.Sleep(500);
                    }
                });
            }
        }
    }

When the browser or any other client sends a request to the ASHX handler, we immediately return in an async manner and free the request thread. At that moment the browser (or any other client) starts reading the streamed data generated in doJobAsync. Note that the data will not be streamed if Flush() is not called.
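The loop in doJobAsync keeps a thread-pool thread busy in Thread.Sleep between writes. As a rough sketch of an alternative, the loop below awaits Task.Delay instead and stops early once the client has gone away. The names SequenceStreamer and WriteSequenceAsync, as well as the three delegate parameters, are my own assumptions for illustration and not part of ASP.NET.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper (not part of ASP.NET): streams numbered chunks
// through caller-supplied delegates, so it can also run outside of IIS.
public static class SequenceStreamer
{
    public static async Task<int> WriteSequenceAsync(
        Action<string> write,      // e.g. context.Response.Write
        Action flush,              // e.g. context.Response.Flush
        Func<bool> isConnected,    // e.g. () => context.Response.IsClientConnected
        int count,
        TimeSpan delay)
    {
        int written = 0;
        for (int n = 0; n < count; n++)
        {
            // Stop producing data once the client has gone away.
            if (!isConnected())
                break;

            write(String.Format("Sequence: {0} <br/>", n));
            flush();               // without the flush nothing reaches the client yet
            written++;

            // Unlike Thread.Sleep, this does not block a thread while waiting.
            await Task.Delay(delay);
        }

        // Number of chunks that were actually written.
        return written;
    }
}
```

Inside ProcessRequestAsync this could be called as `await SequenceStreamer.WriteSequenceAsync(context.Response.Write, context.Response.Flush, () => context.Response.IsClientConnected, 100, TimeSpan.FromMilliseconds(500));`. Passing delegates instead of the HttpContext is only a choice made to keep the sketch independent of System.Web.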

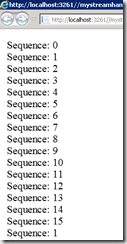

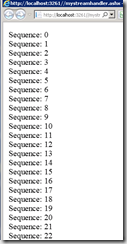

To test this code, open some browser and type the URL of the MyStreamHandler.ASHX:

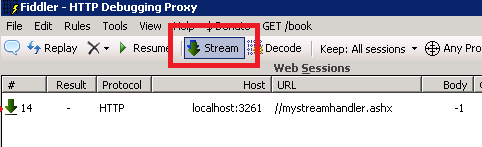

Open Fiddler and notice that the request remains open for a long time. Unfortunately, ASP.NET will abort a request after some time if it doesn't complete. Usually all requests are processed synchronously in a more or less short time, so developers rarely run into this problem. However, when working with the async pattern you may process longer-running jobs. In that case the following line of code is required:

    context.ThreadAbortOnTimeout = false;

This prevents the ASP.NET pipeline from aborting requests which have not completed. Without this line, the scenario only works as long as the request thread is not aborted.

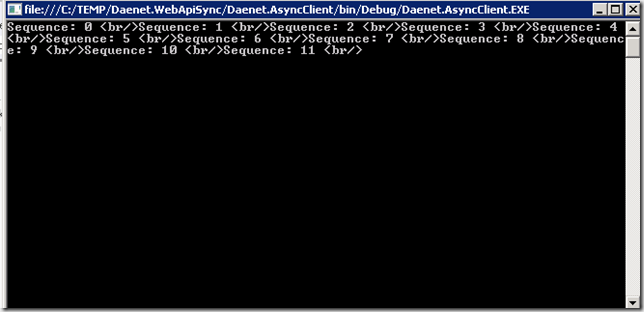

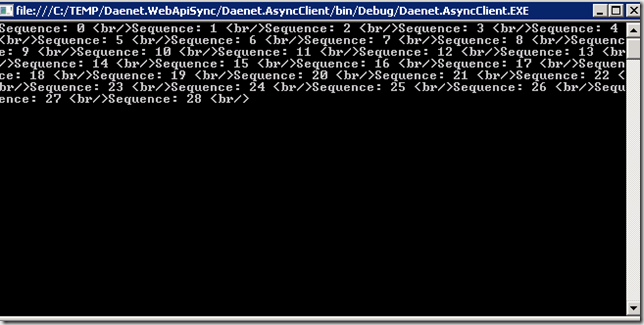

The result in the browser looks as shown in the pictures. As you can see, the browser continually receives more and more data.

Working with Fiddler and Proxies

If you work with Fiddler, please also note the following:

If streaming is not enabled in Fiddler (it is OFF by default), the client will receive all data at once, after all of it has been written to the response stream. By the way, if you work with SignalR and Fiddler you will also have to enable streaming. If you don’t, SignalR will switch to the polling transport.

Console Client Application

To demonstrate the same with another HTTP client, I decided to build a console application. The following piece of code shows a client which receives the stream of data.

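    WebClient client = new WebClient();
    Stream str = client.OpenRead(new Uri("http://localhost:3261/mystreamhandler.ashx"));
    byte[] buff = new byte[100];

    while (true)
    {
        // Blocks until the server has flushed the next chunk.
        var task = str.ReadAsync(buff, 0, buff.Length);
        task.Wait();
        int readBytes = task.Result;

        // Zero bytes means the server has completed the response.
        if (readBytes == 0)
            break;

        // traceout is a helper (not shown here) which prints the received bytes.
        traceout(buff, readBytes);
    }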

The output of the console application shows how it receives the data over a longer period of time.
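For comparison, here is a minimal sketch of the same client written with async/await. It assumes HttpClient (System.Net.Http, .NET 4.5 or later) is available. The class StreamingClient and its methods PumpAsync and RunAsync are hypothetical names used only for this illustration. HttpCompletionOption.ResponseHeadersRead asks HttpClient to return as soon as the headers have arrived instead of buffering the whole body, so the chunks can be read as the server flushes them.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

public static class StreamingClient
{
    // Copies decoded text from source to sink chunk by chunk until the
    // stream ends; returns the total number of characters copied.
    public static async Task<int> PumpAsync(Stream source, TextWriter sink)
    {
        int total = 0;
        char[] buffer = new char[100];
        using (var reader = new StreamReader(source, Encoding.UTF8))
        {
            int read;
            // ReadAsync returns 0 once the server has completed the response.
            while ((read = await reader.ReadAsync(buffer, 0, buffer.Length)) > 0)
            {
                sink.Write(buffer, 0, read);
                sink.Flush();
                total += read;
            }
        }
        return total;
    }

    // Assumption: the handler from this post is reachable under the given URL.
    public static async Task RunAsync(string url)
    {
        using (var client = new HttpClient())
        using (var response = await client.GetAsync(url, HttpCompletionOption.ResponseHeadersRead))
        using (var body = await response.Content.ReadAsStreamAsync())
        {
            await PumpAsync(body, Console.Out);
        }
    }
}
```

From Main this could be started with `StreamingClient.RunAsync("http://localhost:3261/mystreamhandler.ashx").Wait();`. Using a StreamReader rather than decoding each byte buffer separately avoids splitting a multi-byte character across two reads.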

Posted Aug 20 2012, 09:20 AM by Damir Dobric